H2O.ai Blog


Announcing H2O Danube 2: The next generation of Small Language Models from H2O.ai

A new series of Small Language Models from H2O.ai, released under Apache 2.0 and ready to be fine-tuned for your specific needs to run offline and with a smaller footprint. Why Small Language Models? Like most decisions in AI and tech, the decision of which Language Model to use for your production use cases comes down to trade-offs. ...

Boosting LLMs to New Heights with Retrieval Augmented Generation

Businesses today can make leaps and bounds to revolutionize the way things are done with the use of Large Language Models (LLMs). LLMs are widely used by businesses today to automate certain tasks and create internal or customer-facing chatbots that boost efficiency. Challenges with dynamic adaption of LLMs: As with any new hyped-up thi...

Testing Large Language Model (LLM) Vulnerabilities Using Adversarial Attacks

Adversarial analysis seeks to explain a machine learning model by understanding locally what changes need to be made to the input to change a model’s outcome. Depending on the context, adversarial results could be used as attacks, in which a change is made to trick a model into reaching a different outcome. Or they could be used as an exp...

H2O LLM EvalGPT: A Comprehensive Tool for Evaluating Large Language Models

In an era where Large Language Models (LLMs) are rapidly gaining traction for diverse applications, the need for comprehensive evaluation and comparison of these models has never been more critical. At H2O.ai, our commitment to democratizing AI is deeply ingrained in our ethos, and in this spirit, we are thrilled to introduce our innovati...

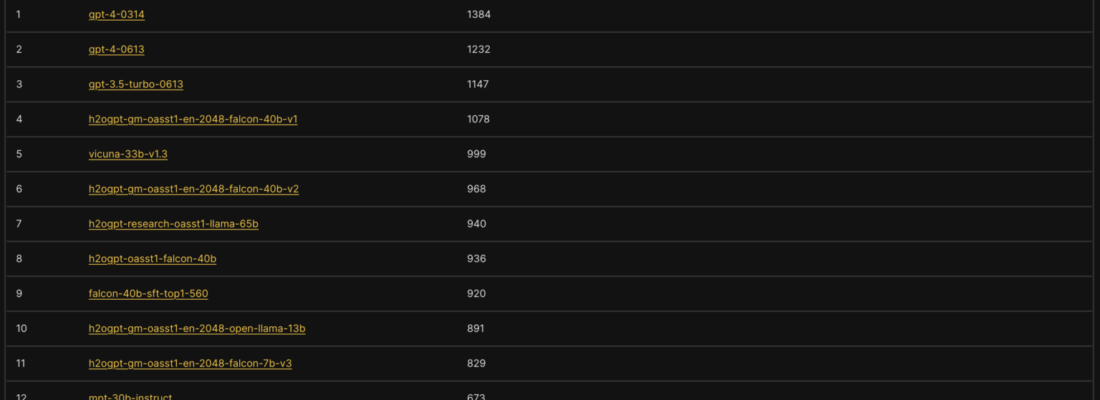

Generating LLM Powered Apps using H2O LLM AppStudio – Part1: Sketch2App

sketch2app is an application that lets users instantly convert sketches to fully functional AI applications. This blog is Part 1 of the LLM AppStudio Blog Series and introduces sketch2app. The H2O.ai team is dedicated to democratizing AI and making it accessible to everyone. One of the focus areas of our team is to simplify the adoption of...

H2O LLM DataStudio: Streamlining Data Curation and Data Preparation for LLMs related tasks

A no-code application and toolkit to streamline data preparation tasks related to Large Language Models (LLMs). H2O LLM DataStudio is a no-code application designed to streamline data preparation tasks specifically for Large Language Models (LLMs). It offers a comprehensive range of preprocessing and preparation functions such as text cl...

Democratization of LLMs

Every organization needs to own its GPT as simply as we need to own our data, algorithms and models. H2O LLM Studio democratizes LLMs for everyone allowing customers, communities and individuals to fine-tune large open source LLMs like h2oGPT and others on their own private data and on their servers. Every nation, state and city needs it...

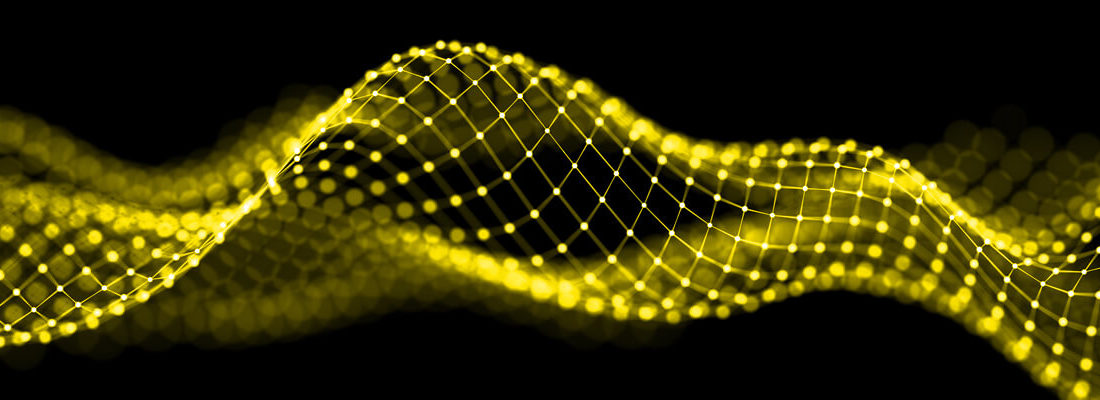

Building the World's Best Open-Source Large Language Model: H2O.ai's Journey

At H2O.ai, we pride ourselves on developing world-class Machine Learning, Deep Learning, and AI platforms. We released H2O, the most widely used open-source distributed and scalable machine learning platform, before XGBoost, TensorFlow and PyTorch existed. H2O.ai is home to over 25 Kaggle grandmasters, including the current #1. In 2017, w...

Effortless Fine-Tuning of Large Language Models with Open-Source H2O LLM Studio

While the pace at which Large Language Models (LLMs) have been driving breakthroughs is remarkable, these pre-trained models may not always be tailored to specific domains. Fine-tuning, the process of adapting a pre-trained language model to a specific task or domain, plays a critical role in NLP applications. However, fine-tuning can be ...
